Introduction: The Blue Circle and the End of Privacy?

It started with an inconspicuous update. Suddenly, it appeared: a small, shimmering blue circle in the chat overview or directly above the 'New Chat' button. For millions of WhatsApp users in Germany and across Europe, this symbol marked the arrival of Meta AI into their most private communication channel. What's being marketed as a useful feature for recipe suggestions or quick web searches has set off alarm bells among data protection officers and IT managers at companies worldwide.

The central question everyone is asking right now is: How does WhatsApp AI privacy actually work? Is Meta now reading my messages? Are my company secrets being used to train the next language model? Understanding WhatsApp data protection has become essential for every business operating in digital spaces.

The reality is complex. While Meta insists that private messages remain private, the technical architecture of AI chats tells a different story. For private users, this is annoying; for businesses, it can be an existential threat. In this article, we analyze the risks and show you how to disable WhatsApp AI (or at least restrict it), but above all we highlight the 'Blue Ocean' opportunity for businesses: how you can leverage the efficiency of AI without surrendering your data sovereignty to a US corporation.

How Secure Is Meta AI on WhatsApp Really? (Privacy Analysis)

To understand the risks, we first need to clear up a widespread misconception: the myth of continuous encryption for AI interactions. This distinction is crucial for any organization considering AI customer service implementation.

The End of End-to-End Encryption (in AI Chats)

WhatsApp built its reputation on the promise of end-to-end encryption (E2E). Technically, this means: Message A is encrypted on your phone and only decrypted again on the recipient's phone. Even WhatsApp (and thus Meta) cannot read the content in between because they don't possess the key. According to WhatsApp's official documentation, this encryption applies to personal chats.

With Meta AI, things are different.

When you interact with the blue circle or mention the bot in a group with `@Meta AI`, the E2E encryption no longer applies in the traditional sense. As explained by Cybersofa security experts, the process works as follows:

- Transport Encryption: The message is encrypted when sent to the server (no one on the WiFi can eavesdrop).

- Server-Side Decryption: For the AI to respond, the message must be decrypted on Meta's servers (usually in the USA) and processed in plain text.

- Processing: The language model (LLM) analyzes the text to generate a response.
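The difference comes down to who holds the decryption key. The following Python sketch is a deliberately simplified toy model. It is not real cryptography and not WhatsApp's actual protocol. The `Message` class and the party names are hypothetical, and "encryption" is reduced to the set of parties able to decrypt a message.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """Toy model (not real crypto): message text plus the parties holding a key."""
    text: str
    key_holders: frozenset[str]


def person_to_person(text: str) -> Message:
    # End-to-end: only sender and recipient hold keys; the server
    # merely relays ciphertext it cannot open.
    return Message(text, frozenset({"sender", "recipient"}))


def chat_with_meta_ai(text: str) -> Message:
    # The "recipient" is the model running on Meta's servers, so the
    # server itself must be able to decrypt the prompt to process it.
    return Message(text, frozenset({"sender", "meta_server"}))


def server_view(msg: Message) -> str:
    """What the server operator can see of the message content."""
    return msg.text if "meta_server" in msg.key_holders else "<ciphertext>"


print(server_view(person_to_person("Meeting at 3")))               # <ciphertext>
print(server_view(chat_with_meta_ai("Summarize this protocol")))   # Summarize this protocol
```

In the person-to-person case the server only ever relays ciphertext. As soon as the language model runs on Meta's servers, the server has to be able to decrypt, and that is exactly what removes the end-to-end guarantee for AI chats.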

This means: Meta is reading along. While the company claims to use this data 'only to improve services,' this is precisely where the problem lies. The implications for businesses implementing Conversational AI solutions are significant.

'Legitimate Interest': How Meta Trains on Your Data

Meta bases its processing of user data for AI training on so-called 'Legitimate Interest' (Art. 6 Para. 1 lit. f GDPR). This is a legal maneuver: instead of explicitly asking each user for permission (opt-in), Meta takes the position that training its AI serves a legitimate company interest that is not overridden by users' rights. According to Ombudsstelle Austria's analysis, this approach raises significant GDPR concerns.

What data is being collected?

- Prompts & Inputs: Everything you write to the AI.

- Feedback: How you react to the responses.

- Metadata: When and how often you use the AI.

- Public Data: Meta has announced it uses public posts from Facebook and Instagram to train and improve context for the WhatsApp AI.

As reported by IAmExpat Germany and Facebook's official privacy policy, this data collection is extensive. For businesses, this is a deal-breaker. If an employee casually asks Meta AI: 'Summarize this meeting protocol for me' or 'Write a response to this customer complaint,' internal company data flows directly into a US corporation's training pool.


Guide: How to Object to Meta AI Data Processing (Step-by-Step)

Many users are desperately searching for a way to remove the blue circle. The bad news first: A complete deactivation of the UI elements is often not provided in current app versions. As Focus Online and Tech-Now report, Meta wants you to use the AI.

The good news: You can object to the use of your data for AI training. This is your legal right under the GDPR (Art. 21).

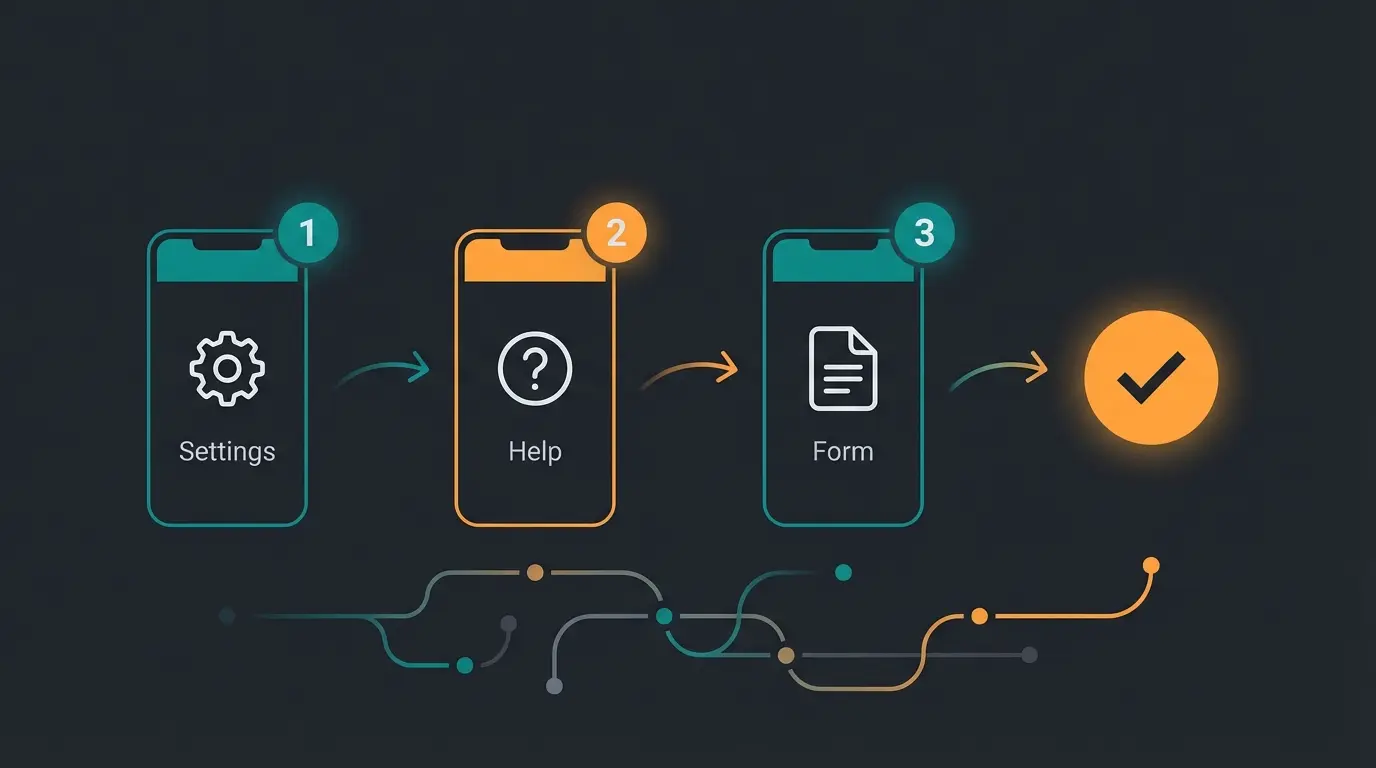

The Path to the Hidden Objection Form

Meta has buried the form deep within the menus. Here's the direct path (as of late 2025):

- Open WhatsApp and go to Settings.

- Tap on Help -> Help Center.

- Search for 'AI' or 'Privacy'. Often you need to scroll all the way down or click 'Contact Support' to access the legal notices.

- Look for the item 'How can I object to the processing of my information?' or a link to the Privacy Policy for Generative AI.

- There you'll find a link to the Objection Form (often external on the Meta website).

According to the German Consumer Protection Center (Verbraucherzentrale), you must be logged in (to Facebook/Instagram) or verify your phone number to complete the process.

Cheat Sheet: Template Text for Your Objection

In the form, you'll be asked for a reason. Under Art. 21(2) GDPR, an objection to direct marketing (including related profiling) needs no justification. For processing based on legitimate interest, however, Art. 21(1) refers to grounds relating to your 'particular situation,' which is why Meta asks you to explain how the processing affects you.

Use this template to maximize your chances (Copy & Paste):

Pro Tip: This objection prevents the training with your data. However, if you actively chat with the bot, the data is still processed to answer the query (processing for contract fulfillment). The only safe way is: Don't click on the blue circle. Understanding these nuances is essential when considering Shopware data protection and other e-commerce privacy requirements.

The Risk for Businesses: Why Meta AI Isn't Suitable for Support

While private users 'only' risk their privacy, businesses using WhatsApp Meta AI risk data protection violations that can result in fines in the millions. Any company considering how to create a WhatsApp bot must understand these fundamental differences.

1. The GDPR Nightmare

As soon as an employee uses Meta AI on their work phone, they're processing business data through a server not under the company's full control. There is no Data Processing Agreement (DPA) for using the consumer AI in the WhatsApp Messenger that meets strict German requirements. Data flows to the USA. While the 'Data Privacy Framework' exists, the legal uncertainty for sensitive customer data is massive. According to analysis from Bolex Legal and Cookiebox.pro, this creates significant compliance exposure.

2. The Hallucination Risk (Brand Safety)

Meta AI is a generalist. It was trained on the 'entire internet.'

- Scenario: A customer asks: 'Is your product vegan?'

- Meta AI Response: 'Yes, most products of this type are vegan.' (Based on probabilities, not your product datasheet).

- Reality: Your product contains beeswax.

- Consequence: Legal warning, customer loss, social media backlash.

Meta AI doesn't know your specific product data. It 'guesses' based on training data. For professional product consultation, this is fatal. This is why companies are increasingly turning to specialized WhatsApp AI chatbots that use controlled data sources.

3. Data Leakage

Similar to the 'Samsung-ChatGPT incident,' employees might be tempted to copy code snippets, strategy papers, or customer data into the chat to have them 'quickly summarized.' This data then potentially becomes part of Meta's knowledge repository. As AI tools take on a growing role in the workplace, establishing clear boundaries between consumer and enterprise AI tools becomes crucial.

Consumer AI vs. Professional AI: The Critical Difference

Here lies your 'Blue Ocean' opportunity. The market often doesn't understand that 'AI in WhatsApp' doesn't equal 'Meta AI.' There's a fundamental technical and legal difference between the consumer bot and a professional business solution. Understanding how AI chatbots transform businesses requires recognizing this distinction.

Comparison: Meta AI vs. Professional AI Solution

| Feature | Meta AI (Consumer) | Professional AI Solution (Business API) |
|---|---|---|
| Access | Via private WhatsApp App | Via WhatsApp Business API (interface) |
| Data Source | The entire internet + User data | Only your company data (Isolated silo) |
| Training | Learns from your inputs (Active Training) | No training with customer data (Static RAG) |
| Encryption | Transport encryption (Meta reads along) | E2E to Business Provider (GDPR-compliant) |
| Control | Meta decides what the AI says | You control 'Tone of Voice' and knowledge |
| Legal Security | Gray area / Risk | DPA possible, server location DE/EU selectable |

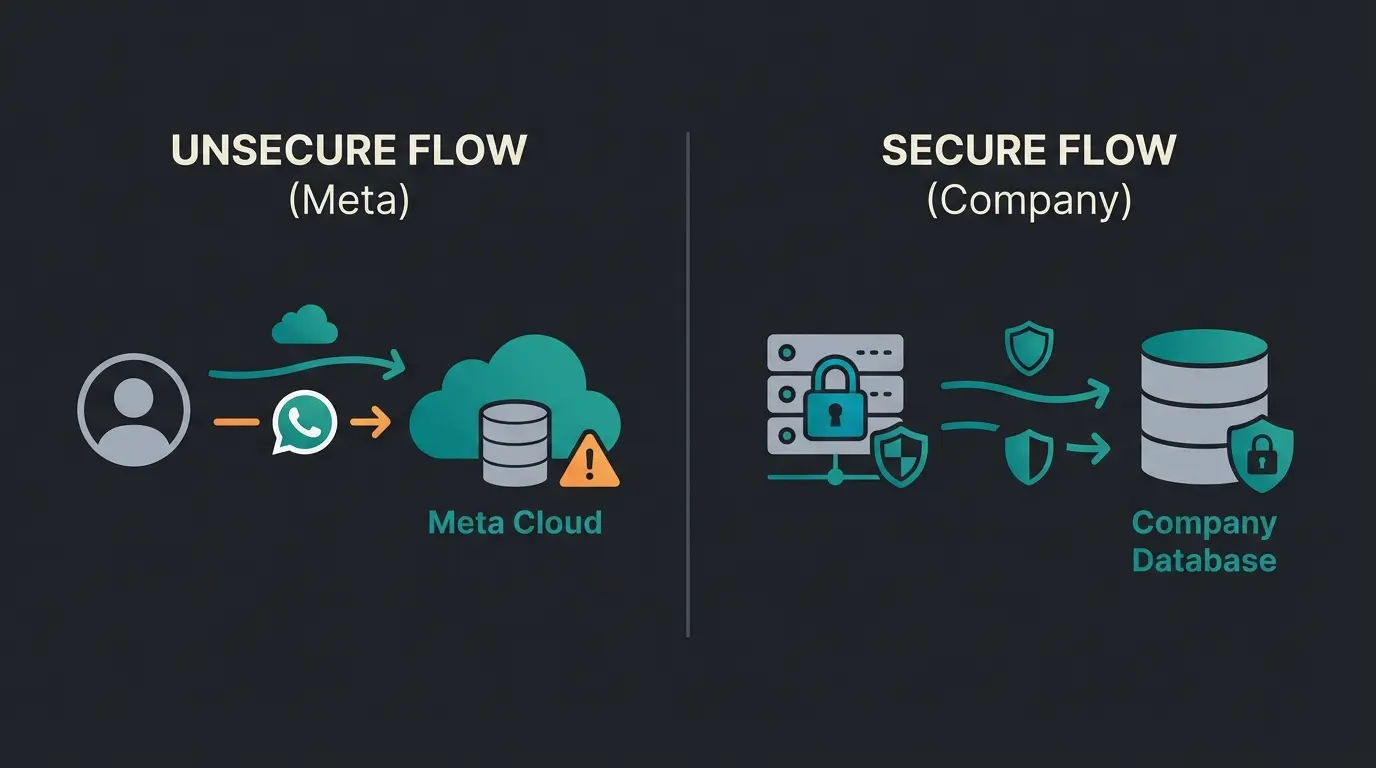

Visualizing the Data Flow

Imagine the path of your data like this:

- Meta AI (consumer): User → WhatsApp → Meta Server (USA) → data decrypted, analyzed, stored and used for training → Response. Your company knowledge ends up in the big pool.

- Professional solution: User → WhatsApp Business API → Your Secure Server (Middleware) → match with your database (RAG) → Response. Meta only serves as 'postal carrier.'

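As documented by Facebook's Business Platform, the Business API operates under fundamentally different privacy standards than the consumer application. The answers are generated by your AI on your controlled server. Meta's role is limited to delivery: with the Meta-hosted Cloud API, messages are decrypted on Meta's infrastructure in order to be passed on, but Meta processes them as a data processor on your behalf.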

Using AI Securely for Product Consultation in WhatsApp

The solution isn't to ban AI, but to use the WhatsApp Business API in combination with specialized AI. Companies looking to implement an AI Chatbot for E-Commerce need to understand the technology that makes this possible.

The Technology: RAG (Retrieval-Augmented Generation)

Instead of a chatbot that 'creatively' invents texts (and hallucinates), professional solutions use RAG:

- The customer asks a question.

- The AI searches exclusively in your uploaded PDFs, manuals, and FAQs for the answer.

- The AI formulates the response based on these facts.

- If it finds no answer in your data, it says: 'I don't have information about that, should I connect you with an employee?'
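The retrieve-then-answer loop above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions:

- Word overlap stands in for the embedding search that a real system would run against a vector database.
- The stop-word list and the `min_overlap` threshold are arbitrary, illustrative choices.
- The actual LLM call is left out. The hypothetical `prepare_reply` only decides whether to escalate or which grounded prompt to hand to the model.

```python
import re
from typing import Optional

# Hand-over wording from the article: escalate instead of guessing.
FALLBACK = ("I don't have information about that, "
            "should I connect you with an employee?")

# Illustrative stop-word list (assumption); real systems use embeddings.
STOPWORDS = {"a", "an", "the", "is", "are", "do", "does", "your", "our",
             "of", "to", "and", "it", "this", "what", "how", "for", "in"}


def tokenize(text: str) -> set[str]:
    """Lower-cased content words of `text`, minus stop words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question: str, passages: list[str],
             min_overlap: int = 2) -> Optional[str]:
    """Return the passage sharing the most words with the question,
    or None if even the best one shares fewer than `min_overlap`."""
    q_words = tokenize(question)
    best, best_score = None, 0
    for passage in passages:
        score = len(q_words & tokenize(passage))
        if score > best_score:
            best, best_score = passage, score
    return best if best_score >= min_overlap else None


def prepare_reply(question: str, passages: list[str]) -> tuple[str, str]:
    """Return ("escalate", fallback text) or ("generate", grounded prompt)."""
    context = retrieve(question, passages)
    if context is None:
        return ("escalate", FALLBACK)
    prompt = ("Answer ONLY from the context below. If the context does not "
              "contain the answer, say you don't know.\n\n"
              f"Context:\n{context}\n\nQuestion: {question}")
    return ("generate", prompt)


docs = [
    "Lip balm product sheet: contains beeswax, so this product is not vegan.",
    "Returns are accepted within 30 days of delivery.",
]
print(prepare_reply("Is your product vegan?", docs)[0])  # generate
print(prepare_reply("Do you ship to Japan?", docs)[0])   # escalate
```

With the beeswax example from earlier, 'Is your product vegan?' retrieves the product sheet and the model is told to answer only from it. A question the documents do not cover falls through to the hand-over message instead of a guess.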

This approach supports the transparency and accountability goals of the EU AI Act, since each answer can be traced back to the source documents it was based on.

Step-by-Step to Secure AI Consultation

- Switch to the API: Instead of the WhatsApp Business App on your phone, use a 'Business Solution Provider' (BSP) that provides access to the API. According to Sofortdatenschutz, this is the foundational step for GDPR compliance.

- Build a Data Silo: Upload your product knowledge into a secure environment (e.g., vector database on German servers).

- Configure Agents: Create an AI agent with strict instructions (System Prompt) to never speculate.

- Integration: Connect the agent with the WhatsApp API.
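In practice, the integration step mostly means receiving webhooks and replying through the API. The Python sketch below assumes the Meta-hosted WhatsApp Cloud API. The `hub.*` verification parameters, the entry → changes → value → messages nesting and the reply JSON follow Meta's public webhook and messaging documentation as we understand it, so check them against the current docs before relying on them.

`answer` stands for your own RAG agent, passed in as a hypothetical callable. The web framework, webhook signature validation and the HTTP POST to the Graph API are deliberately left out.

```python
from typing import Callable, Iterator, Optional


def verify_subscription(params: dict, expected_token: str) -> Optional[str]:
    """Webhook setup handshake: echo hub.challenge back only if the
    verify token matches the one you configured for your app."""
    if (params.get("hub.mode") == "subscribe"
            and params.get("hub.verify_token") == expected_token):
        return params.get("hub.challenge")
    return None


def extract_text_messages(payload: dict) -> Iterator[tuple[str, str]]:
    """Yield (sender, text) for each inbound text message in a webhook
    payload; images, voice notes and status updates are skipped."""
    for entry in payload.get("entry", []):
        for change in entry.get("changes", []):
            for msg in change.get("value", {}).get("messages", []):
                if msg.get("type") == "text":
                    yield msg["from"], msg["text"]["body"]


def build_text_reply(to: str, body: str) -> dict:
    """JSON body for POST /<PHONE_NUMBER_ID>/messages (call not shown)."""
    return {
        "messaging_product": "whatsapp",
        "to": to,
        "type": "text",
        "text": {"body": body},
    }


def handle_webhook(payload: dict, answer: Callable[[str], str]) -> list[dict]:
    """Pass every inbound text to `answer` (your own agent on your own
    server) and return the reply payloads to send back via the API."""
    return [build_text_reply(sender, answer(text))
            for sender, text in extract_text_messages(payload)]


example = {"entry": [{"changes": [{"value": {"messages": [
    {"from": "4915100000000", "type": "text", "text": {"body": "Is it vegan?"}},
]}}]}]}
print(handle_webhook(example, lambda q: "Let me check our product sheet."))
```

Keeping the WhatsApp-specific parsing separate from `answer` is what lets WhatsApp stay the channel while the knowledge base and the model behind `answer` run in infrastructure you control.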

For businesses ready to implement this approach, understanding the WhatsApp Business API chatbot ecosystem is essential.

Conclusion & Checklist for Privacy-Conscious Businesses

The blue circle from Meta AI is a toy for private users, but a trap for businesses. The convenience of 'just quickly' asking the AI in the messenger is paid for with the loss of data sovereignty. But that doesn't mean you have to forgo AI entirely. The key lies in separating infrastructure (WhatsApp as a channel) from intelligence (your own AI).

Checklist: Is Your Business Secure?

- Employee Policy: Is the use of Meta AI on work phones explicitly prohibited?

- Objection Filed: Have you (and your employees) objected to AI training in the privacy settings?

- Channel Separation: Do you use the WhatsApp Business API instead of the app for customer communication?

- Data Sovereignty: Do you know exactly on which servers your AI responses are generated?

FAQ: Common Questions About WhatsApp AI & Privacy

Can I remove the blue Meta AI circle from WhatsApp?

Currently, the icon cannot be completely removed in standard app versions, only minimized or ignored. The most effective protection is simply not clicking on it. While you can adjust some privacy settings, the Meta AI interface element remains visible in most versions.

Does Meta read my private WhatsApp chats?

Meta states that private chats (person to person) continue to be E2E encrypted and are not used for training. The risk only arises when interacting with the AI or in groups where the AI has been activated. However, metadata about your usage patterns may still be collected.

Can businesses use AI in WhatsApp in a GDPR-compliant way?

Yes, with proper implementation through reputable partners. Here, Meta primarily acts as a data processor for transport, while data processing takes place in your controlled environment. A proper Data Processing Agreement (DPA) can be established, and you can choose server locations in Germany or the EU.

What happens to data I have already shared with Meta AI?

Data from past interactions may already be part of Meta's training dataset. Filing an objection prevents future training with your data but cannot retroactively remove data that's already been processed. This is why establishing clear policies before employees use consumer AI is crucial.

How do professional AI solutions avoid hallucinations?

Professional AI solutions using RAG (Retrieval-Augmented Generation) only reference your specific company data, product catalogs, and documentation. This greatly reduces the hallucination risk of Meta AI providing incorrect information based on general internet training data. Your AI is instructed to answer only from your verified sources and to hand the conversation over to an employee when those sources contain no answer.

Disclaimer: This article does not constitute legal advice. Data protection laws change rapidly. Please consult your data protection officer for binding statements.